Project 1: Fruit Classification

Fruit classification plays an important role in many industrial applications, including factories, supermarkets and other fields. It can also help people with dietary requirements to select the correct categories of fruit.

- Choose at least seven different classes from the 131 classes of the Fruits 360 dataset, and report the train, validation and test results. Justify your classification results on the test dataset using the area under the ROC curve (AUC), and provide other relevant performance metrics, such as sensitivity.

- Change the selected classes to pear varieties (Abate, Forelle, Kaiser, Monster, Red, Stone, Williams) or apple varieties (Crimson Snow, Golden, Golden-Red, Granny Smith, Pink Lady, Red, Red Delicious), and report the results with the relevant metrics. Justify your classification results on the test dataset using the AUC of the ROC curve, and provide other relevant performance metrics, such as sensitivity. Justify the difference between the results of this part and those of the previous part.
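As a minimal, library-free sketch of the test metrics requested above, the code below computes per-class sensitivity (recall) and specificity from predicted labels. It also computes one-vs-rest ROC AUC values from predicted class probabilities, using the pairwise (Mann-Whitney U) formulation of the AUC. The function names are hypothetical. In practice, scikit-learn's `confusion_matrix` and `roc_auc_score` provide the same quantities.

```python
from typing import Dict, List, Sequence, Tuple


def sensitivity_specificity(y_true: Sequence[int], y_pred: Sequence[int],
                            n_classes: int) -> Dict[int, Tuple[float, float]]:
    """Per-class (sensitivity, specificity), treating each class one-vs-rest."""
    results = {}
    for c in range(n_classes):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        tn = len(y_true) - tp - fn - fp
        sens = tp / (tp + fn) if tp + fn else float("nan")
        spec = tn / (tn + fp) if tn + fp else float("nan")
        results[c] = (sens, spec)
    return results


def binary_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC = probability that a random positive outscores a random negative.

    Ties count as one half. Pairwise O(P*N) comparison, fine for a sketch.
    """
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(y_true: Sequence[int], probs: Sequence[Sequence[float]],
                    n_classes: int) -> List[float]:
    """One AUC per class, using column c of the softmax output as the score."""
    return [binary_auc([1 if t == c else 0 for t in y_true],
                       [row[c] for row in probs])
            for c in range(n_classes)]
```

Averaging the per-class AUCs gives a macro-averaged AUC, which is a convenient single number for comparing the first and second parts of the project.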

Dataset Specifications:

Filename format: image_index_100.jpg (e.g. 32_100.jpg), r_image_index_100.jpg (e.g. r_32_100.jpg), r2_image_index_100.jpg or r3_image_index_100.jpg.

The total number of images: 90483.

The number of classes: 131 (fruits and vegetables).
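If image lists are built from file names, a small parser for the filename patterns above can help, for example to track which images carry the r, r2 or r3 prefix. This is a sketch only. The function name and return format are hypothetical, and the code does not interpret the meaning of the prefixes or of the trailing number.

```python
import re
from typing import Optional, Tuple

# Matches e.g. 32_100.jpg, r_32_100.jpg, r2_32_100.jpg, r3_32_100.jpg
_PATTERN = re.compile(r"^(?:(r[23]?)_)?(\d+)_(\d+)\.jpg$")


def parse_fruits360_name(filename: str) -> Optional[Tuple[str, int, int]]:
    """Return (prefix, image_index, trailing_number), or None if no match.

    prefix is '' for the plain pattern, otherwise 'r', 'r2' or 'r3'.
    """
    m = _PATTERN.match(filename)
    if m is None:
        return None
    prefix, index, trailing = m.groups()
    return (prefix or "", int(index), int(trailing))
```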

Download the dataset from the link below:

Fruits 360 | Kaggle

Research Papers:

Horea Muresan, Mihai Oltean, Fruit recognition from images using deep learning, Acta Univ. Sapientiae, Informatica Vol. 10, Issue 1, pp. 26-42, 2018.

Project 2: Human Action Detection

Classification of human activities is one of the emerging research areas in the field of computer vision.

It can be used in several applications, including medical informatics, surveillance, human-computer interaction and task monitoring.

- Choose at least seven different classes from the 15 classes of the Human Action Detection dataset, and report the train, validation and test results. Justify your classification results on the test dataset using the area under the ROC curve (AUC), and provide other relevant performance metrics, such as sensitivity.

- Change the selected classes to running, dancing, fighting, cycling, hugging, drinking and eating, and report the results with the relevant metrics. Justify your classification results on the test dataset using the AUC of the ROC curve, and provide other relevant performance metrics, such as sensitivity. Justify the difference between the results of this part and those of the previous part.

Dataset Specifications:

The total number of images: 18000.

Training set size: 15000 images (one action per image).

Test set size: 3000 images (one action per image).

The number of classes: 15 (different actions).
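The dataset is distributed as a training set and a test set only. To report validation results, a validation set therefore has to be carved out of the training images. Below is a minimal sketch of a stratified split, in which each class keeps roughly the same proportion in both parts. The 20% fraction, the seed and the function name are illustrative assumptions. scikit-learn's `train_test_split(..., stratify=labels)` does the same job.

```python
import random
from collections import defaultdict
from typing import Hashable, List, Sequence, Tuple


def stratified_split(labels: Sequence[Hashable], val_fraction: float = 0.2,
                     seed: int = 0) -> Tuple[List[int], List[int]]:
    """Split sample indices into (train, val), stratified by label."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)  # local RNG so the split is reproducible
    train, val = [], []
    for label in sorted(by_class, key=str):
        idxs = by_class[label]
        rng.shuffle(idxs)
        n_val = round(len(idxs) * val_fraction)  # per-class validation share
        val.extend(idxs[:n_val])
        train.extend(idxs[n_val:])
    return sorted(train), sorted(val)
```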

Download the dataset from the link below:

Human Action Detection - Artificial Intelligence | Kaggle

Research Papers:

Dash, S.C.B., Mishra, S.R., Srujan Raju, K. et al. Human action recognition using a hybrid deep learning heuristic. Soft Comput 25, 13079–13092 (2021).

Project 3: Echocardiography View Classification

In echocardiography, many canonical view types are possible, each displaying distinct aspects of the heart's complex anatomy. During routine clinical care, the sonographer intentionally captures a specific view when acquiring images, but the view type is neither annotated on the image nor recorded in the electronic record. As a result, it is difficult to isolate a specific anatomical view of interest from the raw data alone.

- Use TMED-1 to classify images into three view-type labels: PLAX (parasternal long axis), PSAX (parasternal short axis) or Other (any view other than PLAX or PSAX).

- Use TMED-2 to classify images into the following classes: PLAX, PSAX, A2C (apical two-chamber), A4C (apical four-chamber) or Other.

- Report the train, validation and test results. Justify your classification results on the test dataset using the AUC of the ROC curve, and provide other relevant performance metrics, such as sensitivity.

Dataset Specifications:

The TMED dataset contains transthoracic echocardiogram (TTE) imagery acquired during routine care in accordance with American Society of Echocardiography (ASE) guidelines, all obtained between 2011 and 2020 at Tufts Medical Center.

TMED-1 set: 260 patients

TMED-2 set: 577 patients
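Each patient contributes many images. Splitting at the image level can therefore place images of the same patient in both the training and the test set, which inflates the reported metrics. A sketch of a patient-level (grouped) split is below. How patient IDs are obtained from the TMED files is not specified here, so the `patient_ids` input is an assumption, as are the split fractions and the function name. scikit-learn's `GroupShuffleSplit` is an off-the-shelf alternative.

```python
import random
from typing import Dict, List, Sequence, Tuple


def patient_level_split(patient_ids: Sequence[str],
                        fractions: Tuple[float, float, float] = (0.7, 0.15, 0.15),
                        seed: int = 0) -> Dict[str, List[int]]:
    """Assign every image index to train/val/test so that no patient
    appears in more than one subset.

    patient_ids[i] is the (assumed) patient identifier of image i.
    """
    patients = sorted(set(patient_ids))
    random.Random(seed).shuffle(patients)
    n_train = int(len(patients) * fractions[0])
    n_val = int(len(patients) * fractions[1])

    subset_of = {}
    for i, p in enumerate(patients):
        if i < n_train:
            subset_of[p] = "train"
        elif i < n_train + n_val:
            subset_of[p] = "val"
        else:
            subset_of[p] = "test"  # remaining patients go to the test set

    split: Dict[str, List[int]] = {"train": [], "val": [], "test": []}
    for idx, p in enumerate(patient_ids):
        split[subset_of[p]].append(idx)
    return split
```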

Download the dataset from the link below:

Data Access | Tufts Medical Echocardiogram Dataset (TMED)

Research Papers:

Neda Azarmehr, Xujiong Ye, James P. Howard, Elisabeth S. Lane, Robert Labs, Matthew J. Shun-Shin, Graham D. Cole, Luc Bidaut, Darrel P. Francis, Massoud Zolgharni, "Neural architecture search of echocardiography view classifiers," J. Med. Imag. 8(3) 034002 (22 June 2021).

Zhe Huang, Gary Long, Benjamin Wessler, and Michael C. Hughes, "A New Semi-supervised Learning Benchmark for Classifying View and Diagnosing Aortic Stenosis from Echocardiograms," in Proceedings of the 6th Machine Learning for Healthcare (MLHC) Conference, 2021.

Project 4: Forest Cover Segmentation

Forest segmentation is used in land use/land cover mapping. For example, forest cover is the amount of land area that is covered by forest, and it may be measured in relative or absolute terms. It can be estimated by identifying the forest regions and measuring the forest cover directly from the satellite images.
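Once a binary forest mask is available (predicted or ground truth), relative forest cover is simply the fraction of forest pixels. Absolute cover follows if the ground area covered by one pixel is known. That pixel area is not given in the dataset description, so `pixel_area_m2` below is an assumed input, and the function name is hypothetical.

```python
from typing import Optional, Sequence, Tuple


def forest_cover(mask: Sequence[Sequence[int]],
                 pixel_area_m2: Optional[float] = None) -> Tuple[float, Optional[float]]:
    """Return (relative cover in [0, 1], absolute cover in m^2 or None).

    mask: 2D binary mask; any nonzero value counts as forest, so both
    0/1 and 0/255 masks work.
    pixel_area_m2: ground area of one pixel (assumed known from the
    imagery's ground sampling distance).
    """
    total = sum(len(row) for row in mask)
    forest = sum(1 for row in mask for v in row if v)
    relative = forest / total if total else 0.0
    absolute = forest * pixel_area_m2 if pixel_area_m2 is not None else None
    return relative, absolute
```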

- Segment the forest areas in the satellite images. Report the train, validation and test results, and provide relevant performance metrics.
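Intersection over Union (IoU, also called the Jaccard index) and the Dice coefficient are the usual overlap metrics for binary segmentation. Pixel accuracy, precision and recall can be derived from the same pixel counts. Below is a minimal pure-Python sketch. It assumes the masks are same-shaped 2D lists in which any nonzero value marks the foreground, and the function name is hypothetical.

```python
from typing import Dict, Sequence


def binary_seg_metrics(pred: Sequence[Sequence[int]],
                       target: Sequence[Sequence[int]]) -> Dict[str, float]:
    """Pixel-level metrics for one binary mask pair (nonzero = foreground)."""
    tp = fp = fn = tn = 0
    for prow, trow in zip(pred, target):
        for p, t in zip(prow, trow):
            p, t = bool(p), bool(t)
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    total = tp + fp + fn + tn
    # Convention (an assumption): an empty prediction on an empty target scores 1.
    iou = tp / (tp + fp + fn) if tp + fp + fn else 1.0
    dice = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 1.0
    return {
        "iou": iou,
        "dice": dice,
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }
```

Per-image scores can be averaged over the test set, or the counts can be accumulated over all images before computing the ratios. The two give different numbers, so it is worth stating which one is reported.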

Dataset Specifications:

The dataset contains 5108 aerial images of size 256x256 pixels.

The meta_data.csv file lists the aerial images and their respective binary mask images.

Download the dataset from the link below:

Forest Aerial Images for Segmentation | Kaggle

Research Papers:

Gritzner, Daniel, and Jörn Ostermann. "SegForestNet: Spatial-Partitioning-Based Aerial Image Segmentation." arXiv preprint arXiv:2302.01585 (2023).

Project 5: Full Body Segmentation

Human segmentation often uses the output of person detection in video. Segmentation and tracking of people in video have significant applications in monitoring and in estimating human pose in 2D images and 3D space.

The Martial Arts, Dancing and Sports (MADS) dataset consists of martial arts actions (Tai-chi and Karate), dancing actions (hip-hop and jazz) and sports actions (basketball, volleyball, football, rugby, tennis and badminton).

- Segment the person in each image. Report the train, validation and test results, and provide relevant performance metrics.

Dataset Specifications:

A total of 1192 images and masks.

Download the dataset from the link below:

Segmentation Full Body MADS Dataset | Kaggle

Research Papers:

Le, V.-H.; Scherer, R. Human Segmentation and Tracking Survey on Masks for MADS Dataset. Sensors 2021, 21, 8397.

Project 6: Left Ventricular Segmentation in Echocardiography

Segmentation of the left ventricle (LV) is currently carried out manually by experts. Automating this process has proved challenging because of speckle noise and the inherently poor quality of ultrasound images. The CAMUS dataset contains 2D apical four-chamber and two-chamber view sequences acquired from 500 patients.

In these sequences, experts have contoured the endocardium and epicardium of the left ventricle and the left atrium wall.

- Segment the left ventricle endocardium (LVEndo), the myocardium (more specifically, the epicardium contour, named LVEpi) and the left atrium (LA). Report the train, validation and test results, and provide relevant performance metrics.
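The three structures can be scored with a per-structure Dice coefficient. The sketch below assumes that the ground-truth label maps use 0 = background, 1 = LVEndo, 2 = myocardium and 3 = LA. Check this convention against the dataset's own documentation before use. The sketch treats LVEpi as the region enclosed by the epicardium, that is, the union of labels 1 and 2. The function names are hypothetical.

```python
from typing import Dict, Iterable, Sequence

# Assumed label values in the CAMUS ground-truth maps (verify before use).
STRUCTURES = {
    "LVEndo": {1},    # left ventricle cavity, inside the endocardium
    "LVEpi": {1, 2},  # everything inside the epicardium = cavity + myocardium
    "LA": {3},        # left atrium
}


def dice_for_labels(pred: Sequence[Sequence[int]], target: Sequence[Sequence[int]],
                    labels: Iterable[int]) -> float:
    """Dice between the regions of pred and target whose value is in `labels`."""
    labels = set(labels)
    inter = p_sum = t_sum = 0
    for prow, trow in zip(pred, target):
        for p, t in zip(prow, trow):
            p_in, t_in = p in labels, t in labels
            inter += int(p_in and t_in)
            p_sum += int(p_in)
            t_sum += int(t_in)
    # Both regions empty: count as perfect agreement (a convention).
    return 2 * inter / (p_sum + t_sum) if p_sum + t_sum else 1.0


def camus_dice(pred: Sequence[Sequence[int]],
               target: Sequence[Sequence[int]]) -> Dict[str, float]:
    """Dice score for each structure defined in STRUCTURES."""
    return {name: dice_for_labels(pred, target, lbls)
            for name, lbls in STRUCTURES.items()}
```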

Dataset Specifications:

The overall CAMUS dataset consists of clinical exams from 500 patients and comprises: i) a training set of 450 patients, along with the corresponding manual references based on the analysis of one clinical expert; and ii) a testing set composed of 50 new patients.

The raw input images are provided in the MetaImage (.mhd/.raw) file format.
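A MetaImage file pairs a small plain-text header (.mhd) of `Key = Value` lines with a binary pixel file (.raw). In practice, a library such as SimpleITK (`SimpleITK.ReadImage` followed by `SimpleITK.GetArrayFromImage`) reads both. The stdlib-only sketch below parses just the header, which is enough to inspect fields such as image size, pixel spacing and element type. The function names are hypothetical.

```python
from typing import Dict, List, Union

Scalar = Union[str, int, float]
Value = Union[Scalar, List[Scalar]]


def _convert(token: str) -> Scalar:
    """Turn a header token into int or float when possible."""
    for cast in (int, float):
        try:
            return cast(token)
        except ValueError:
            pass
    return token


def parse_mhd_header(text: str) -> Dict[str, Value]:
    """Parse MetaImage header text ('Key = Value' per line) into a dict.

    Numeric fields become int/float; multi-valued fields (e.g. DimSize,
    ElementSpacing) become lists; everything else stays a string.
    """
    header: Dict[str, Value] = {}
    for line in text.splitlines():
        if "=" not in line:
            continue
        key, _, raw = line.partition("=")
        values = [_convert(t) for t in raw.split()]
        header[key.strip()] = values[0] if len(values) == 1 else values
    return header
```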

Download the dataset from the link below:

Human Heart Project (insa-lyon.fr)

Research Papers:

Azarmehr, N., Ye, X., Sacchi, S., Howard, J.P., Francis, D.P., Zolgharni, M. (2020). Segmentation of Left Ventricle in 2D Echocardiography Using Deep Learning, Medical Image Understanding and Analysis. MIUA 2019.

S. Leclerc et al., "Deep Learning for Segmentation Using an Open Large-Scale Dataset in 2D Echocardiography," in IEEE Transactions on Medical Imaging, vol. 38, no. 9, pp. 2198-2210, Sept. 2019, doi: 10.1109/TMI.2019.2900516

Project 7: Product Checkout Dataset Object Detection

In recent years, there has been growing interest in integrating computer vision technology into the retail industry. Automatic checkout (ACO) is one of the critical problems in this area: it aims to automatically generate the shopping list from images of the products being purchased. The large-scale Retail Product Checkout (RPC) dataset is the largest such dataset, containing a significant number of product images across various categories.

- Detect the products in the test checkout images. Report the train, validation and test results, and provide relevant performance metrics.
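For automatic checkout, the detections in each image are ultimately turned into a shopping list, that is, a count per product category. The sketch below shows that step, plus an image-level checkout accuracy: the fraction of test images whose predicted list matches the ground-truth list exactly. The detection tuple format, the 0.5 score threshold and the function names are assumptions. The RPC paper cited below defines its own checkout metrics, which should be used when comparing results against it.

```python
from collections import Counter
from typing import Iterable, Sequence, Tuple

# Assumed detection format: (category_name, confidence_score, (x1, y1, x2, y2)).
Detection = Tuple[str, float, Tuple[float, float, float, float]]


def shopping_list(detections: Iterable[Detection], score_thr: float = 0.5) -> Counter:
    """Count detected products per category, ignoring low-confidence boxes."""
    return Counter(cat for cat, score, _box in detections if score >= score_thr)


def checkout_accuracy(pred_lists: Sequence[Counter],
                      true_lists: Sequence[Counter]) -> float:
    """Fraction of images whose predicted shopping list is exactly right.

    Ground-truth Counters should not contain zero-count entries, so that
    equality compares only the products actually present.
    """
    if not true_lists:
        return 0.0
    hits = sum(1 for p, t in zip(pred_lists, true_lists) if p == t)
    return hits / len(true_lists)
```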

Dataset Specifications:

This dataset contains 200 product categories and 83,739 images. It includes single-product images taken in a controlled environment and multi-product checkout images taken at the checkout counter.

Various annotations are provided for both the single-product images and the checkout images.

Download the dataset from the link below:

Detector Working | Kaggle

Research Papers:

Wei, XS., Cui, Q., Yang, L. et al. RPC: a large-scale and fine-grained retail product checkout dataset. Sci. China Inf. Sci. 65, 197101 (2022).

Project 8: Fruit Images for Object Detection

A fruit dataset for object detection containing three different fruits: apple, banana and orange.

- Detect the objects in the test dataset (oranges, bananas and apples, as bounding boxes). Report the train, validation and test results, and provide relevant performance metrics.
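Detection results are usually scored in three steps. First, predicted boxes are matched to ground-truth boxes of the same class at an IoU threshold (0.5 is common). Precision and recall are then computed from the matches, and average precision (mAP) is obtained over a range of score thresholds. Below is a minimal sketch of the matching step for one image and one class. Boxes are assumed to be (xmin, ymin, xmax, ymax) in pixels, and the function names are hypothetical. Full mAP evaluation is usually done with an established tool such as pycocotools rather than by hand.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds: Sequence[Tuple[float, Box]], gts: Sequence[Box],
                     iou_thr: float = 0.5) -> Tuple[int, int, int]:
    """Greedy matching, highest score first. Returns (TP, FP, FN).

    Each ground-truth box can be matched at most once; unmatched
    predictions are false positives, unmatched ground truths are misses.
    """
    used = [False] * len(gts)
    tp = fp = 0
    for _score, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            if not used[j]:
                iou = box_iou(box, gt)
                if iou > best_iou:
                    best_iou, best_j = iou, j
        if best_j >= 0 and best_iou >= iou_thr:
            used[best_j] = True
            tp += 1
        else:
            fp += 1
    fn = used.count(False)
    return tp, fp, fn
```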

Dataset Specifications:

240 training images and 60 test images.

3 different types of fruit: apple, banana and orange.

The .xml files in the data contain the coordinates of the objects.
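The sketch below assumes that the annotation files follow the Pascal VOC layout: one `<object>` element per box, containing a `<name>` and a `<bndbox>` with `xmin`, `ymin`, `xmax` and `ymax`. Check one file before relying on this. Under that assumption, Python's standard `xml.etree.ElementTree` is enough to read the annotations. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def read_voc_boxes(xml_text: str) -> List[Tuple[str, int, int, int, int]]:
    """Return (label, xmin, ymin, xmax, ymax) for every <object> in a VOC-style file."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        bb = obj.find("bndbox")
        # int(float(...)) tolerates coordinates written as e.g. "3.0"
        coords = [int(float(bb.find(tag).text))
                  for tag in ("xmin", "ymin", "xmax", "ymax")]
        boxes.append((obj.find("name").text.strip(), *coords))
    return boxes
```

For a file on disk, the file's text can be passed in directly, or `ET.parse(path).getroot()` can replace `ET.fromstring`.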

Download the dataset from the link below:

Fruit Images for Object Detection | Kaggle

Research Papers:

Sa I, Ge Z, Dayoub F, Upcroft B, Perez T, McCool C. DeepFruits: A Fruit Detection System Using Deep Neural Networks. Sensors (Basel). 2016 Aug 3;16(8):1222.

Mureșan, Horea & Oltean, Mihai. (2018). Fruit recognition from images using deep learning. Acta Universitatis Sapientiae, Informatica. 10. 26-42. 10.2478/ausi-2018-0002.

Project 9: Early Myocardial Infarction Detection over Multi-view Echocardiography

Myocardial infarction (MI), which occurs due to a blockage of the coronary arteries feeding the myocardium, is the leading cause of mortality in the world. An early diagnosis of MI and its localization can mitigate the extent of myocardial damage by facilitating early therapeutic interventions. The HMC-QU dataset is the first publicly shared dataset serving myocardial infarction detection on the left ventricle wall. It includes a collection of apical 4-chamber (A4C) and apical 2-chamber (A2C) view 2D echocardiography recordings.

- Propose a method to detect MI using:

  - single-view echocardiography, using the information extracted from either the A4C or the A2C view;

  - multi-view echocardiography, by merging the information from the A4C and A2C views.

- Report the train, validation and test results, and provide relevant performance metrics.

Dataset Specifications:

The dataset includes a collection of apical 4-chamber (A4C) and apical 2-chamber (A2C) view 2D echocardiography recordings obtained in 2018 and 2019. The recordings were acquired with ultrasound machines from different vendors, namely Philips and GE Vivid (GE-Health-USA). The temporal resolution (frame rate) of the recordings is 25 fps, and the spatial resolution varies from 422x636 to 768x1024 pixels.

The dataset contains 162 A4C view recordings, belonging to 93 MI patients (all first-time and acute MI) and 69 non-MI subjects.

It also contains 130 A2C view recordings, belonging to 68 MI patients and 62 non-MI subjects.
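One simple way to merge the two views is late fusion: train a single-view classifier per view and combine their MI probabilities per subject. There are 162 A4C and 130 A2C recordings, so some subjects presumably have only one view. The sketch below therefore averages whichever view probabilities are present. The subject-keyed dictionaries, the equal weights, the 0.5 threshold and the function name are assumptions. Feature-level fusion is an equally valid alternative.

```python
from typing import Dict


def late_fusion(p_a4c: Dict[str, float], p_a2c: Dict[str, float],
                w_a4c: float = 0.5, threshold: float = 0.5) -> Dict[str, int]:
    """Fuse per-subject MI probabilities from two views into a 0/1 decision.

    Subjects with only one view fall back to that view's probability.
    """
    decisions = {}
    for subject in set(p_a4c) | set(p_a2c):
        a, b = p_a4c.get(subject), p_a2c.get(subject)
        if a is not None and b is not None:
            p = w_a4c * a + (1.0 - w_a4c) * b  # weighted average of both views
        else:
            p = a if a is not None else b
        decisions[subject] = int(p >= threshold)
    return decisions
```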

Download the dataset from the link below:

HMC-QU Dataset | Kaggle

Research Papers:

A. Degerli, M. Zabihi, S. Kiranyaz, T. Hamid, R. Mazhar, R. Hamila, and M. Gabbouj, "Early Detection of Myocardial Infarction in Low-Quality Echocardiography," in IEEE Access, vol. 9, pp. 34442-34453, 2021, https://doi.org/10.1109/ACCESS.2021.3059595.

S. Kiranyaz, A. Degerli, T. Hamid, R. Mazhar, R. E. F. Ahmed, R. Abouhasera, M. Zabihi, J. Malik, R. Hamila, and M. Gabbouj, "Left Ventricular Wall Motion Estimation by Active Polynomials for Acute Myocardial Infarction Detection," in IEEE Access, vol. 8, pp. 210301-210317, 2020, https://doi.org/10.1109/ACCESS.2020.3038743.

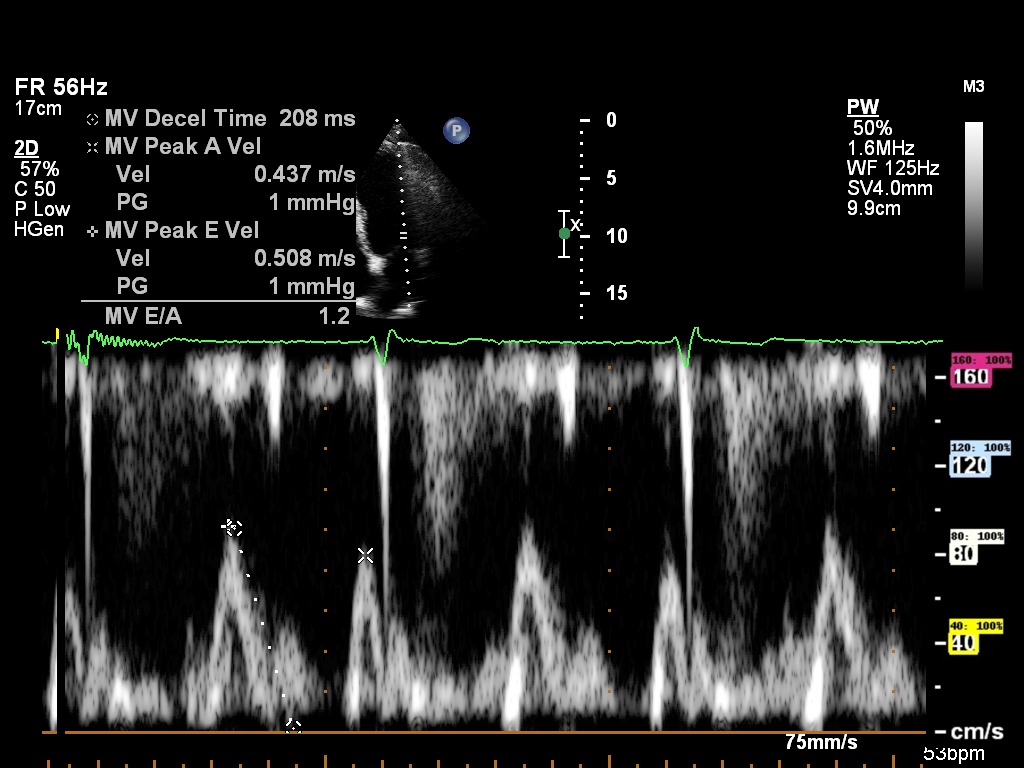

Project 10: Doppler Mitral Inflow Echocardiography, Pixel-to-Velocity Conversion

This project involves working with Doppler mitral inflow echocardiographic images, which show blood flow velocity measurements.

Current AI models can detect and measure the necessary parameters on mitral echocardiographic images, but the measurements are obtained in pixels. To apply these techniques in real-world scenarios, the automated measurements must be converted to velocity values.

Usually, the only source of the pixel-to-velocity conversion rate is the image itself. Echocardiographic images contain the velocity scale values as well as the zero-velocity baseline (0-line). The project therefore requires the student to create a deployable model that processes echocardiographic images and derives the pixel-to-velocity conversion rate.
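Suppose the velocity scale labels and the zero-velocity baseline have already been located in an image, for example by reading the tick labels and detecting their pixel rows. That detection step is the actual project and is not shown here. Converting pixels to velocity then reduces to a linear fit. The sketch below assumes a vertical velocity axis, tick positions given as image rows (with y growing downward) and velocities in cm/s. All names are hypothetical.

```python
from typing import Sequence, Tuple


def fit_velocity_scale(rows: Sequence[float],
                       velocities: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of velocity = slope * row + intercept.

    rows: pixel row of each detected scale tick (y grows downward).
    velocities: the value printed at each tick (0 for the baseline).
    Returns (slope, intercept); abs(slope) is the velocity per pixel.
    """
    n = len(rows)
    if n < 2:
        raise ValueError("need at least two ticks")
    mean_y = sum(rows) / n
    mean_v = sum(velocities) / n
    sxx = sum((y - mean_y) ** 2 for y in rows)
    if sxx == 0:
        raise ValueError("ticks must lie on different rows")
    sxy = sum((y - mean_y) * (v - mean_v) for y, v in zip(rows, velocities))
    slope = sxy / sxx
    return slope, mean_v - slope * mean_y


def row_to_velocity(row: float, slope: float, intercept: float) -> float:
    """Convert a measured pixel row (e.g. an E- or A-wave peak) to velocity."""
    return slope * row + intercept
```

Fitting all visible ticks, rather than using only two, makes the conversion rate less sensitive to a single mislocated tick.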

The student is free to use any available computer vision techniques, as long as they can be deployed and incorporated into a larger deep learning pipeline.

The student will also be able to have initial consultations with members of the IntSav team, and will work in the AI-lab.

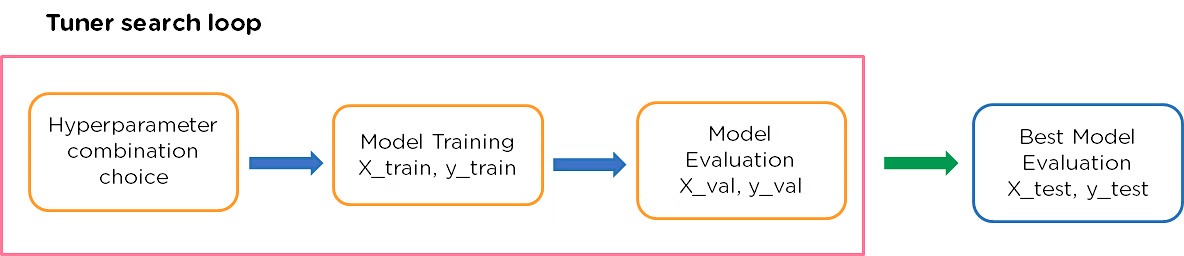

Project 11: Advanced Optimization of Convolutional Neural Networks for Echocardiographic Image Analysis using Automated Hyperparameter Tuning

Introduction:

In the field of medical imaging, particularly echocardiography, Convolutional Neural Networks (CNNs) have shown potential to enhance diagnostic processes through accurate image classification and segmentation. However, the performance of CNN models depends on careful tuning of their hyperparameters, a task that is traditionally time-consuming and requires extensive experimentation. This project proposes using automated hyperparameter optimization (HPO) techniques to streamline this process, with the aim of improving the efficiency and accuracy of CNN models for echocardiographic analysis. By leveraging HPO methods and tools such as Keras Tuner and Optuna, the project seeks to remove the manual tuning bottleneck and foster advances in automated medical diagnostics.
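The sketch below makes the idea concrete without depending on a particular library. It implements plain random search, the simplest automated HPO strategy: sample a configuration from a search space, evaluate it and keep the best. Keras Tuner and Optuna automate the same loop with more sample-efficient strategies (for example Bayesian optimization, or Optuna's TPE sampler) and with trial pruning. The search space, the function names and `toy_objective` are hypothetical. `toy_objective` stands in for "train a CNN and return its validation score".

```python
import math
import random
from typing import Callable, Dict, Optional, Tuple

Config = Dict[str, object]


def sample_config(rng: random.Random) -> Config:
    """Draw one hypothetical CNN configuration."""
    return {
        "learning_rate": 10 ** rng.uniform(-5, -2),  # log-uniform in [1e-5, 1e-2]
        "batch_size": rng.choice([16, 32, 64]),
        "dropout": rng.uniform(0.0, 0.5),
        "n_filters": rng.choice([16, 32, 64, 128]),
    }


def random_search(objective: Callable[[Config], float], n_trials: int = 20,
                  seed: int = 0) -> Tuple[Optional[Config], float]:
    """Evaluate n_trials random configurations; return the best (config, score)."""
    rng = random.Random(seed)
    best_cfg, best_score = None, -math.inf
    for _ in range(n_trials):
        cfg = sample_config(rng)
        score = objective(cfg)  # in practice: validation accuracy after training
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


def toy_objective(cfg: Config) -> float:
    """Stand-in for training: peaks near learning_rate = 1e-3 and dropout = 0.2."""
    return -abs(math.log10(cfg["learning_rate"]) + 3) - abs(cfg["dropout"] - 0.2)
```

Whatever the search strategy, the objective should be computed on the validation set only, so that the test set remains an unbiased estimate for the final tuned model.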

Aims:

- To review and assess the current landscape of CNN architectures and HPO techniques, focusing on their applications and performance in medical imaging, specifically echocardiography.

- To implement and evaluate automated HPO strategies to enhance CNN models for echocardiographic view classification and image segmentation, comparing their effectiveness against traditional manual tuning methods.

Significance:

This project aims to significantly advance echocardiographic analysis by automating CNN model optimization, enhancing clinical diagnostics and making advanced AI tools more accessible for medical applications and to researchers.

Implementation and Datasets:

You can implement automated hyperparameter tuning for the following tasks:

- Echocardiography view classification, using the description and dataset in Project 3.

- Left ventricular segmentation in echocardiography, using the description and dataset in Project 6.