Project Introduction

Laryngeal bioimpedance can deliver unique information about human

voice and phonation. This project is comprised of two aspects: the development of a

self-calibrating laryngeal bioimpedance measurement system and its application in voice

features extraction. The implementation of Artificial Neural Networks is focused on the

near real-time classification of

voice acts in the distinction between speech and singing. One of the main contributions

of this project is represented by the creation of a unique dataset of laryngeal bioimpedance

measurements for the training of Artificial Neural Networks. The development of a self-calibrating

measurement system, alongside the derived dataset, aims to make the technology more employed in voice

disorder evaluation and pre-diagnosis as well as broadening the spectrum of possible applications.

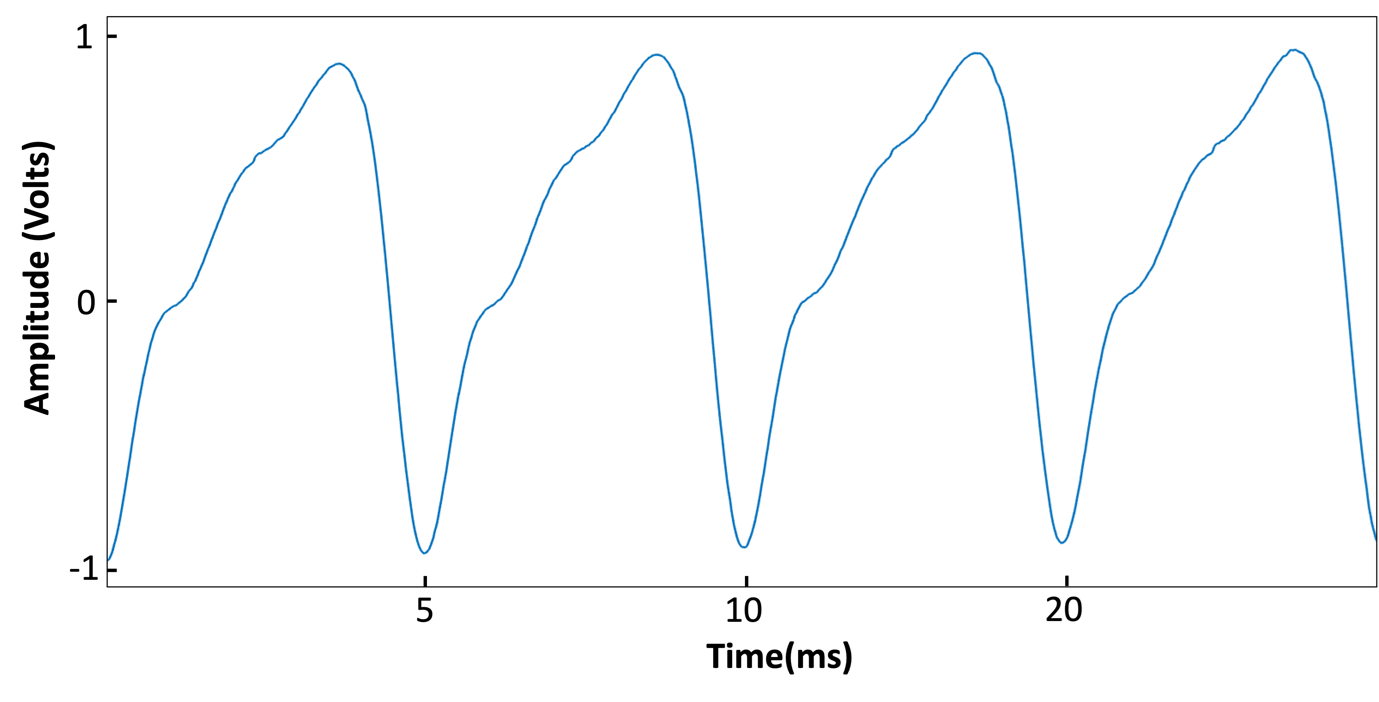

Dataset

A laryngeal bioimpedance measurement system developed by the team was used to record voice acts from different users.

The dataset is comprised of two sections:

singing voices and speaking voices.

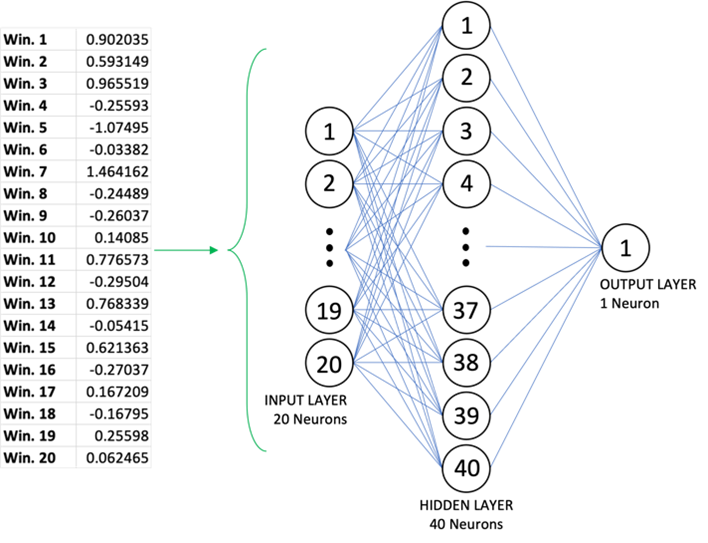

Network Architecture

For the processing of the data, we employed the use of Mel Frequency

Cepstrum Coefficients (MFFC). This delivers a series of frequency coefficients

over 20 frequency bands. The resulting array is the data being fed to the network.

The network is therefore comprised as follows:

Input layer: has 20 Neurons

1 Hidden layer: has 40 Neurons

Output Layer: has 1 Neuron for binary classification

The figure on the right shows the network architecture.

Implementation

The models were implemented in Python within the TensorFlow 2.0 framework.

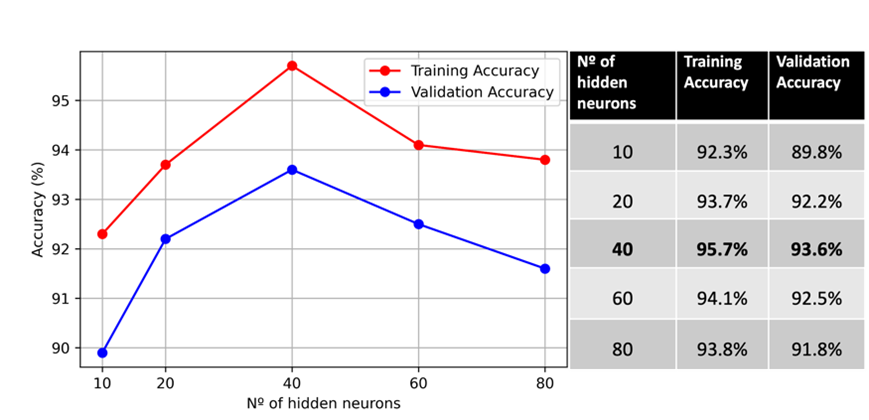

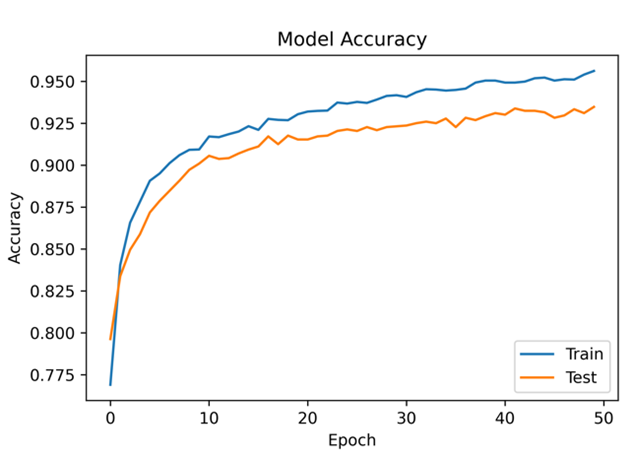

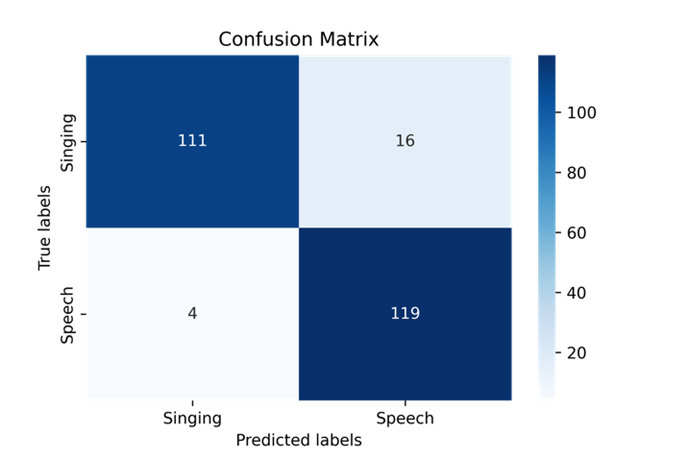

Performance assessment

Accuracy comparison by the number of neurons for the hidden layer

Model accuracy for 40 hidden neurons

Confusion matrix for “unseen” data